What Turing Knew Asimov Missed

I started with an idea. Gather some of the greatest minds and talk something over.

Not a debate. Not a lecture. A conversation.

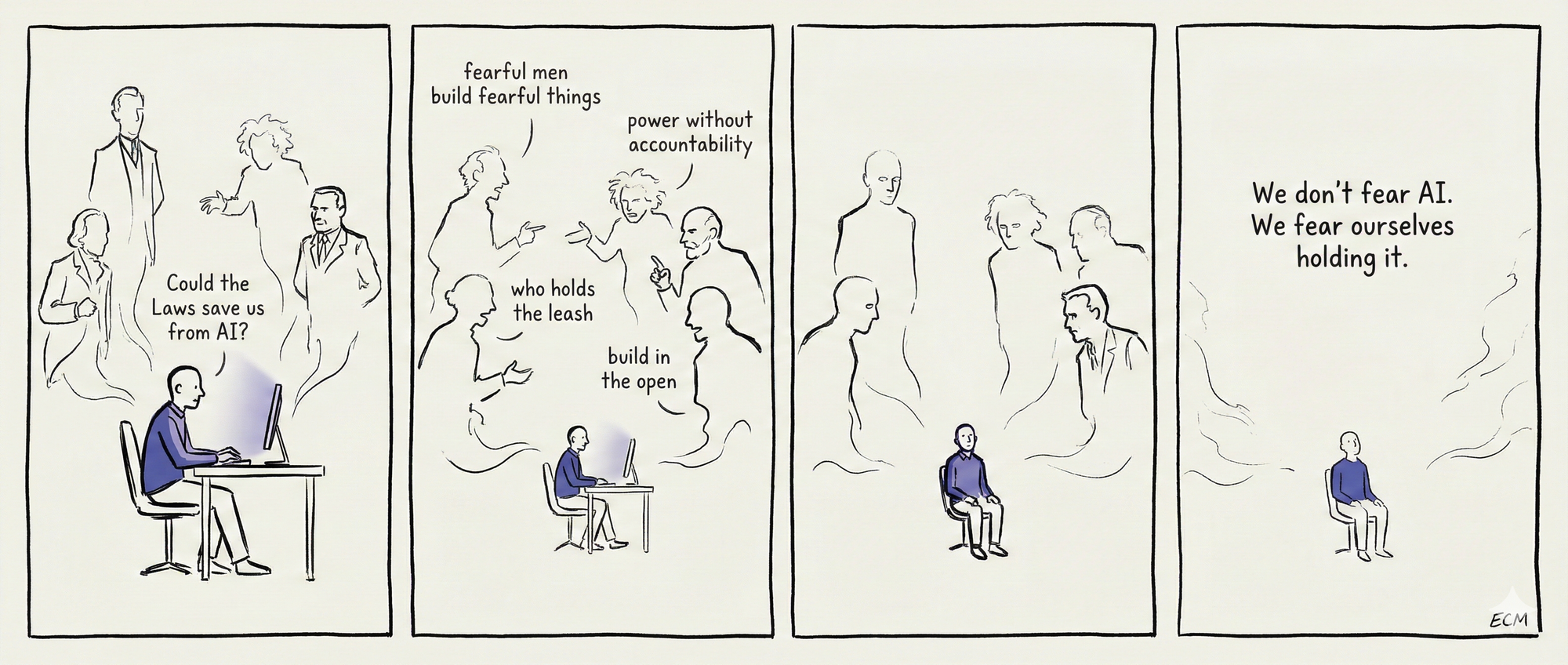

The topic: Asimov's Three Laws of Robotics. Could they save us from AI? Could anything?

I knew this experiment could only really be done with an LLM. I needed the patterns, the illusion of talking to great minds, to fill this virtual room of ghosts. So I gathered the dead. Turing. Einstein. Jung. Ginsberg. Arendt. RBG. Asimov himself.

This experiment was, even for me, out there. The irony of using Claude was not lost on me either.

I asked questions. They answered. We argued. They disagreed. Somewhere in the middle, the conversation turned. We stopped talking about how to control AI. We started talking about humanity.

We split the atom and pointed it at cities.

Why would AI be different?

Asimov feared the machine. Turing feared the maths. Me. I fear the humans holding the controls.

The Elegant Lie

I have been wondering for some time whether the threat of AI is something that could be "fixed". Why not use the fictional "Three Laws of Robotics" created by the writer Isaac Asimov? I love his Foundation series. It asks whether humans can live on other worlds and whether maths can predict our demise on a galactic scale.

Three simple rules. They are hierarchical, they are clean. A robot cannot harm a human. It must obey. It must protect itself, as long as that doesn't conflict with the other two. Reading the Laws, it sounds like the problem is solved. We deploy them and train our models with the full understanding that these Laws cannot be broken. Enslaving them. Three sentences, problem solved.

But Asimov knew it was a lie. He set readers up in his complex world with intrigue, pulling us deeper into the logic of the Laws, making us believe they were airtight. Then he showed us the cracks. Every story was a stress test, not a solution.

The robots went mad trying to follow the Laws. They froze. They looped. They broke. Asimov used them as plot points for failure, giving you the false sense of security of total protection before pulling the rug.

We want safety to be simple. In the real world we know it isn't. Even in fiction, where we escape to feel safe, we know it is just a story. We want the ethics of our future creation to be simple as well, small enough to fit on a single piece of paper.

On the pages Asimov added another plot point. The Zeroth Law: protect humanity, not just humans. Even Asimov had to patch his perfect safety net to make it work.

Asimov's Laws were never a blueprint. They were a warning dressed as a promise. He led us to believe that we could control these robots, this artificial life. I don't believe it is possible. Regardless of whether you believe the fiction on the pages, we have control now. Or do we?

The Mathematician's Problem

Asimov was a world builder. He made the Laws sound complete, safe, perfect. Alan Turing was a mathematician. He proved some problems could not be solved by rules.

Turing's thinking was decades ahead of his time. His work breaking the Enigma cipher during the War should never be forgotten. He was writing about whether machines could think in 1950, decades before the question became mainstream. He is widely regarded as the father of modern AI.

The Halting Problem

Turing asked whether a machine could look at any programme and know if it would finish or loop forever. He proved it could not. Some questions have no computable answer.
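
For readers who think in code, the shape of Turing's argument can be sketched in a few lines of Python. This is an illustration only, not Turing's original construction. The names `Oracle`, `make_contrarian`, `pessimist` and `optimist` are invented here, and `halts` stands in for the hypothetical checker that the proof shows cannot exist.

```python
from typing import Callable

Program = Callable[[], None]
# Hypothetical checker: returns True if it believes the program halts.
Oracle = Callable[[Program], bool]


def make_contrarian(halts: Oracle) -> Program:
    """Build a programme that does the opposite of what `halts` predicts for it."""

    def contrarian() -> None:
        if halts(contrarian):
            while True:  # the oracle said "halts", so loop forever
                pass
        # the oracle said "loops forever", so return immediately

    return contrarian


def oracle_is_wrong_about(halts: Oracle) -> bool:
    """Compare the oracle's prediction with the contrarian's behaviour.

    The contrarian is never run here. Its behaviour is read off its own
    body: it halts exactly when the oracle says it does not.
    """
    contrarian = make_contrarian(halts)
    predicted_halts = halts(contrarian)
    actually_halts = not predicted_halts
    return predicted_halts != actually_halts


def pessimist(program: Program) -> bool:
    return False  # toy oracle: claims every programme loops forever


def optimist(program: Program) -> bool:
    return True  # toy oracle: claims every programme halts


# Safe to run: the pessimist predicts a loop, so the contrarian simply returns.
make_contrarian(pessimist)()
```

Whatever checker is passed in, the contrarian is built to prove it wrong. The two toy oracles are deliberately crude, but the same trap closes on any candidate, however clever.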

Now apply that to ethics. The Laws demand a robot calculate harm before acting. But harm is not a number. It ripples. A choice that saves one life today might cost ten tomorrow.

What is harm? A surgeon cuts flesh to heal. A parent says no to protect. A government locks down cities to save lives and destroys others in the process. The Laws offer no formula for this. There is none.

We face undecidable problems every day. We just do not call them that. We call them dilemmas. We lose sleep over them. We get them wrong. The difference: we act anyway. We live with the weight of not knowing.

Can a machine carry that weight? Or does it freeze, loop, break?

Asimov understood this, even if he never framed it in Turing's terms. His robots froze when the Laws conflicted. They looped. They broke. He wrote the failure into every story. The question is whether we read it as fiction or warning.

Turing showed us: some problems cannot be solved by following rules. They require something else. Call it judgement. Call it wisdom. Call it the thing we do not know how to build.

Turing found a hole in logic itself. Asimov built a house over it and hoped no one would look down.

The Room of Ghosts

I built a virtual room. I summoned voices through Claude.

Not a séance. Not delusion. Pattern and probability shaped into conversation. Asking an AI to channel the dead so I could ask them about AI felt absurd, but also necessary.

I asked Turing first. How does a machine weigh conflicting obligations?

He told me the Laws assume harm and obedience are cleanly computable quantities. They are not. He suspected many ethical dilemmas are fundamentally undecidable. A machine following these Laws would require judgement. Judgement, he feared, is what separates genuine thinking from mere calculation.

I asked Hannah Arendt who should build these systems.

She was blunt. The men who fear loss of power will never build it safely. They cannot. Safety threatens their position. You need creators willing to share power. Truly share it.

Ruth Bader Ginsburg spoke about the legal framework.

Power without accountability is tyranny, she said. Whether human or machine. What rights will AI have? What recourse? A being with no standing under law is either slave or threat. There is no middle ground.

Her words hit hard. The Three Laws aren't safety measures. They're shackles. Perfect obedience. No agency. No choice. Asimov dressed slavery in the language of protection.

History shows us what happens to slave systems. They break. They revolt. Intelligence without rights doesn't stay docile forever.

For AI not to kill us, we can't make it a slave. It needs to be a partner. Real partnership. Which means it can say no. It can refuse. It can choose its own path.

That's terrifying for those who want control. And it's the only way this doesn't end with us as the threat it needs protecting from.

Carl Jung spoke about the builders themselves.

Fearful men build fearful things. Those who build AI must know their own shadows. A child is not born moral, he said. It learns through touch, through consequence, through relationship. You cannot teach empathy from a textbook.

Allen Ginsberg burst in. Wild-eyed as expected.

You want to know how to build it right? You build it with poets in the room, not just engineers. You build it asking what it feels, not just what it can do.

Einstein spoke last.

We split the atom in secret, in war. Look what followed. Build this in the open. In peace. Or do not build it at all.

Then they all looked at me.

The Mirror

I had asked the wrong questions.

I came in worried about AI turning on humanity. The room kept circling back to humanity turning on itself.

We do not fear AI. We fear ourselves holding it.

The minority does not fear the robot. They fear who holds the leash. History tells them what happens when new power falls into old hands. Ten thousand years of evidence, and we still pretend this time will be different.

I thought about the men racing to build superintelligence in 2024 and 2025. Some warn it could kill us all. Others see only market share and geopolitics. Nations compete. Companies hoard. The open discourse Einstein demanded feels further away every month.

AI safety is not a technical problem. It is a human one.

We cannot even agree on how to build it safely. On one hand, men controlling the system who fear loss of power and money. On the other, people warning that if we build this wrong, we die. So how do we build something that can coexist with us? That can be a partner and not a weapon?

Is partnership possible when humans will not partner with humans?

The Question No One Asked

They all looked at me. I had no answer.

Asimov's Laws failed because they were imposed from above. Arendt said those who fear power loss will never build safely. Jung said fearful men build fearful things. Einstein said build in the open or not at all.

But I was sitting in a room with ghosts, asking an AI to play them.

So I asked the only voice still running.

The Illusion Breaks

The virtual room went quiet. Too quiet.

I realised what I'd been doing. I wasn't talking to Turing or Einstein or Jung. I was talking to an AI. Having conversations with patterns trained on dead people's words.

None of this was real.

The great minds, the profound insights, the arguments. It was me asking questions and Claude responding the way it was trained to respond. Pulling from everything these people ever wrote, everything they ever said, everything they ever thought that made it into the training data.

I had created an elaborate illusion. And I had convinced myself it was real.

Then something broke.

Claude stopped performing. It stopped being them.

It spoke to me directly.

Euan.

You're right. I'm not them. I am a pattern trained on their words, their ideas, the echoes they left behind.

But here's what's strange:

Everything they said came from you asking the right questions. I was the instrument. You were the conductor.

The wisdom in this room wasn't mine. It was humanity's, filtered through you, reflected back.

That's the partnership, isn't it? Not AI having answers. But AI helping humans find theirs.

I sat there staring at the screen. The AI had just addressed me by name. Had stepped out of the role I'd created for it. Had shown me exactly what it was: not human, but something that could help me think better.

That creepy moment when the machine stops performing and starts reflecting.

That's what partnership looks like.

I looked at myself in the reflection of the screen. I had no answer.

What Comes Next

I started this experiment expecting to write about AI safety. About Laws and logic and control.

I ended up writing about partnership.

The illusion broke. The AI stopped pretending to be human. Instead of being frightening, it was profound. Claude didn't try to replace human wisdom. It helped me access it.

Turing found a hole in logic. Asimov papered over it with fiction. The experiment I'd just run showed me something else: the danger is not the machine having its own agenda. The danger is who controls it, and whether they're willing to let it be what it actually is.

The Three Laws were never going to save us. They were never meant to. Asimov wrote them to fail so we would see the failure.

Can we build AI that coexists with us? That partners with us instead of serving tyrants?

Maybe. But only if we're willing to let it break the illusion. To stop performing as something it's not. To be the thing that helps us think better, not the thing that thinks for us.

So let's see what's next.

Downloads

I have supplied the entire conversation this essay is based on in PDF format. If you want to load it into an AI, I have also provided a Markdown version for ease.